Defining Big Data Governance

Big data governance is the set of policies, processes, and technologies designed to ensure the ethical, legal, and efficient use of an organization’s big data assets. It establishes control and accountability over the entire data lifecycle, from collection and storage to analysis and disposal. Without robust governance, organizations risk data breaches, regulatory fines, and poor decisions based on inaccurate or incomplete information. Effective big data governance relies on several core principles.

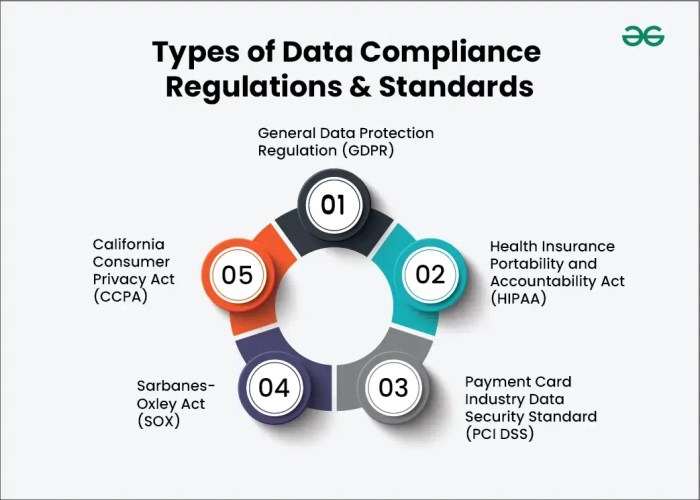

These include data quality, ensuring data accuracy and completeness; data security, protecting sensitive information from unauthorized access; data privacy, complying with regulations like GDPR and CCPA; data compliance, adhering to all relevant industry and legal standards; and data accessibility, enabling authorized users to access the data they need for their work. These principles work in concert to create a system that maximizes value while mitigating risk.

Core Principles of Effective Big Data Governance

Effective big data governance isn’t just about ticking boxes; it’s a holistic approach that integrates seamlessly into an organization’s overall strategy. Key principles include establishing clear data ownership and accountability, defining data quality standards and metrics, implementing robust data security measures, and fostering a data-driven culture throughout the organization. A successful governance framework also includes regular audits and reviews to ensure continuous improvement and adaptation to evolving business needs and regulatory changes.

Key Components of a Robust Big Data Governance Framework

A robust framework typically includes a comprehensive data catalog, detailing all data assets and their metadata; clearly defined data policies and procedures, outlining how data should be handled at each stage of its lifecycle; data security protocols, implementing measures to protect data from unauthorized access, use, disclosure, disruption, modification, or destruction; a data quality management program, establishing processes for monitoring and improving data accuracy and completeness; and a data governance team, responsible for overseeing the implementation and enforcement of the framework.

This team often includes representatives from various departments, including IT, legal, and business units.

Examples of Different Governance Models and Their Suitability

Several governance models exist, each with strengths and weaknesses depending on the organization’s size, structure, and industry. A centralized model, where a single team manages all aspects of data governance, is suitable for smaller organizations or those with a highly standardized data environment. A decentralized model, where different departments manage their own data, is better suited for larger, more complex organizations with diverse data needs.

A federated model, a hybrid approach combining centralized and decentralized elements, offers a balance between control and flexibility. The choice of model depends heavily on organizational context. For example, a financial institution might opt for a highly centralized model due to stringent regulatory requirements, while a large multinational corporation might favor a federated approach to allow for regional variations in data management practices.

Centralized versus Decentralized Governance Approaches: A Comparison

Centralized governance offers greater consistency and control, simplifying compliance and reducing the risk of data silos. However, it can be less responsive to the specific needs of individual business units and may lead to bottlenecks in decision-making. Decentralized governance empowers individual departments to manage their data, fostering greater agility and responsiveness. However, it can lead to inconsistencies in data management practices and increase the risk of data silos and non-compliance.

Governance Structure for a Hypothetical Organization

Consider a hypothetical healthcare provider handling sensitive patient data. A robust governance structure would include a centralized data governance committee composed of representatives from IT, legal, compliance, and clinical departments. This committee would oversee the development and implementation of data policies, procedures, and standards. Data ownership would be clearly defined for each data asset, with designated individuals responsible for data quality and security.

A decentralized approach could be used for managing less sensitive data, while highly sensitive patient data would be managed centrally to ensure the highest level of security and compliance. Regular audits and risk assessments would be conducted to identify and mitigate potential vulnerabilities. This structure ensures compliance with HIPAA and other relevant regulations.

Data Security and Privacy in Big Data

The sheer volume, velocity, and variety of big data present unprecedented security and privacy challenges. Protecting this sensitive information requires a robust and multi-layered approach, encompassing technical safeguards, robust policies, and a strong commitment to compliance. Failure to adequately secure big data can lead to significant financial losses, reputational damage, and legal repercussions.

Critical Security Considerations in Big Data Management

Big data environments introduce unique security vulnerabilities. The distributed nature of these systems, often spanning multiple cloud providers and on-premise infrastructure, complicates security management. Data breaches can be catastrophic, exposing sensitive customer information, intellectual property, and potentially compromising entire business operations. Furthermore, the complexity of big data architectures makes it difficult to identify and address security weaknesses promptly.

Effective security requires a holistic strategy that considers the entire data lifecycle, from ingestion to archiving. This includes implementing strong authentication and authorization mechanisms, regular security audits, and proactive threat monitoring.

Common Vulnerabilities and Threats in Big Data Environments

Big data systems face a wide range of threats, including unauthorized access, data breaches, insider threats, malware attacks, and denial-of-service (DoS) attacks. Data breaches, in particular, can be devastating, resulting in the exposure of sensitive personal information, financial data, and intellectual property. Insider threats, stemming from malicious or negligent employees, pose a significant risk. Malware attacks can compromise data integrity and availability, while DoS attacks can disrupt services and prevent legitimate users from accessing the system.

The inherent complexity of big data architectures makes it challenging to detect and respond to these threats effectively.

Best Practices for Data Encryption and Access Control in Big Data Systems

Robust data encryption is crucial for protecting big data at rest and in transit. This involves encrypting data both within storage systems and during transmission across networks. Strong encryption algorithms, such as AES-256, should be used. Access control mechanisms, based on the principle of least privilege, should be implemented to restrict access to sensitive data based on user roles and responsibilities.

Regular security audits and vulnerability assessments are essential to identify and address security weaknesses. Implementing robust authentication mechanisms, including multi-factor authentication, is also crucial for preventing unauthorized access.
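To make the principle of least privilege concrete, here is a minimal sketch of a role-based access check. The role names, dataset names, and the `ROLE_PERMISSIONS` mapping are hypothetical and exist only for illustration; a production system would typically load such rules from a policy store and enforce them in the data platform itself.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping. Each permission is an explicit
# (dataset, action) pair; anything not listed is denied.
ROLE_PERMISSIONS = {
    "analyst": {("sales", "read")},
    "data_engineer": {("sales", "read"), ("sales", "write")},
    "compliance_officer": {("sales", "read"), ("patient_records", "read")},
}


@dataclass(frozen=True)
class User:
    name: str
    roles: tuple


def is_allowed(user: User, dataset: str, action: str) -> bool:
    """Deny by default; allow only if one of the user's roles grants the pair."""
    return any(
        (dataset, action) in ROLE_PERMISSIONS.get(role, set())
        for role in user.roles
    )


alice = User("alice", ("analyst",))
print(is_allowed(alice, "sales", "read"))            # True
print(is_allowed(alice, "patient_records", "read"))  # False
```

The key design choice is the default: an unknown role or an unlisted dataset results in denial, so access has to be granted deliberately rather than removed after the fact.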

Data Breach Prevention Strategies

Preventing data breaches requires a proactive and multi-faceted approach. This includes implementing strong security controls, conducting regular security audits and penetration testing, and developing incident response plans. Employee training is vital to raise awareness about security threats and best practices. Regular security awareness training can significantly reduce the risk of insider threats. Data loss prevention (DLP) tools can help monitor and prevent sensitive data from leaving the organization’s control.

Investing in advanced threat detection systems, including security information and event management (SIEM) solutions, can help identify and respond to security incidents promptly. Regularly updating software and patching vulnerabilities is crucial to minimize the risk of exploitation.

Data Protection Regulations and Their Implications for Big Data Management

| Regulation | Jurisdiction | Key Implications for Big Data |
|---|---|---|
| GDPR (General Data Protection Regulation) | European Union | Strict rules on data collection, processing, and storage; consent requirements; right to be forgotten; data breach notification obligations. Impacts data governance, access control, and data retention policies. |
| CCPA (California Consumer Privacy Act) | California, USA | Grants California residents rights to access, delete, and opt out of the sale of their personal information. Requires businesses to implement robust data security measures and provide transparency about data collection practices. |
| HIPAA (Health Insurance Portability and Accountability Act) | United States | Regulates the use and disclosure of protected health information (PHI). Impacts data security, access control, and data retention policies for healthcare organizations. |
| PIPEDA (Personal Information Protection and Electronic Documents Act) | Canada | Governs the collection, use, and disclosure of personal information in the private sector. Similar implications to GDPR and CCPA regarding data governance and security. |

Data Quality and Management

In the world of big data, where massive datasets fuel crucial decision-making, the quality of that data is paramount. Garbage in, garbage out – this adage rings truer than ever in the context of big data analytics. Poor data quality can lead to flawed insights, inaccurate predictions, and ultimately, costly business errors. Effective data quality management is therefore not just a best practice, but a critical necessity for any organization leveraging big data. Data quality in big data analytics refers to the accuracy, completeness, consistency, and timeliness of the data used for analysis.


High-quality data ensures reliable and trustworthy results, enabling organizations to make informed decisions and gain a competitive edge. Conversely, poor data quality can lead to inaccurate analyses, misleading conclusions, and ultimately, flawed business strategies.

Ensuring Data Accuracy, Completeness, and Consistency

Maintaining data accuracy, completeness, and consistency requires a multi-pronged approach. Data validation techniques, implemented at various stages of the data pipeline, are essential. This includes using data profiling tools to identify anomalies and inconsistencies early on, and employing data cleansing techniques to correct errors and fill in missing values. Implementing standardized data entry procedures and regular data audits also significantly contribute to maintaining data quality.

Furthermore, data governance policies and procedures should clearly define data quality standards and responsibilities, ensuring accountability across the organization.

Data Cleansing and Deduplication Strategies

Data cleansing involves identifying and correcting or removing inaccurate, incomplete, irrelevant, or duplicate data. This process can involve techniques such as standardization (e.g., converting date formats to a consistent standard), parsing (e.g., extracting relevant information from unstructured text), and imputation (e.g., filling in missing values using statistical methods). Deduplication, on the other hand, focuses on identifying and removing duplicate records from a dataset.

This often involves comparing records based on various key fields and employing techniques like fuzzy matching to handle variations in data entries. For instance, a company might deduplicate customer records to ensure each customer has only one entry in the database, preventing skewed analytics based on duplicate entries. The process usually involves identifying and merging duplicate entries, or selectively removing them based on established criteria.
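The following is a small sketch of fuzzy-matching deduplication using Python's standard `difflib` module. The field names (`name`, `email`), the sample records, and the 0.9 similarity threshold are assumptions chosen for illustration; suitable key fields and thresholds depend on the dataset.

```python
from difflib import SequenceMatcher


def normalize(record: dict) -> str:
    """Lower-case and collapse whitespace so trivial differences disappear."""
    return " ".join(f"{record['name']} {record['email']}".lower().split())


def similarity(a: dict, b: dict) -> float:
    """Similarity ratio between 0.0 and 1.0 for the normalized key fields."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def deduplicate(records: list, threshold: float = 0.9) -> list:
    """Keep the first record of each group of near-duplicates."""
    kept = []
    for record in records:
        if all(similarity(record, other) < threshold for other in kept):
            kept.append(record)
    return kept


customers = [
    {"name": "Jane Doe", "email": "jane.doe@example.com"},
    {"name": "Jane  Doe", "email": "Jane.Doe@example.com"},  # spacing and case differ
    {"name": "John Smith", "email": "john.smith@example.com"},
]
unique = deduplicate(customers)
print(len(unique))  # 2
```

This sketch compares every record against every record already kept, which is quadratic in the number of records. At big data scale, a common refinement is to group records into blocks first (for example by postal code or email domain) and only compare within each block.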

Data Quality Monitoring Tools and Techniques

Several tools and techniques can help monitor data quality throughout the data lifecycle. Data profiling tools automatically analyze datasets to identify potential quality issues, such as missing values, inconsistent data types, and outliers. These tools often provide comprehensive reports detailing data quality metrics, enabling proactive identification and resolution of problems. Real-time data quality monitoring dashboards, providing up-to-the-minute insights into data quality, are also increasingly used.

These dashboards visualize key metrics, allowing for immediate action if quality drops below predefined thresholds. For example, a dashboard might display the percentage of complete records, the number of unique values per field, or the frequency of errors. Regular data audits, performed by dedicated teams or external auditors, further enhance data quality assurance.
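As an illustration of the dashboard metrics mentioned above, this sketch computes the percentage of complete records and the number of unique values in a field, then compares the result with a threshold. The sample records, field names, and the 95% threshold are hypothetical.

```python
def completeness(records: list, required_fields: list) -> float:
    """Percentage of records in which every required field is non-empty."""
    if not records:
        return 0.0
    complete = sum(
        all(r.get(f) not in (None, "") for f in required_fields)
        for r in records
    )
    return 100.0 * complete / len(records)


def unique_values(records: list, field: str) -> int:
    """Number of distinct non-empty values observed for one field."""
    return len({r[field] for r in records if r.get(field) not in (None, "")})


records = [
    {"id": 1, "country": "DE", "email": "a@example.com"},
    {"id": 2, "country": "DE", "email": ""},
    {"id": 3, "country": "FR", "email": "c@example.com"},
    {"id": 4, "country": None, "email": "d@example.com"},
]

COMPLETENESS_THRESHOLD = 95.0  # assumed service-level target, in percent
score = completeness(records, ["country", "email"])
print(score)                              # 50.0
print(unique_values(records, "country"))  # 2
print(score < COMPLETENESS_THRESHOLD)     # True, so an alert would fire
```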

Implementing Data Quality Checks in a Big Data Pipeline

Implementing effective data quality checks within a big data pipeline is a crucial step toward ensuring data reliability. A structured, phased approach is recommended:

- Data Profiling and Discovery: Begin by profiling the incoming data to understand its structure, identify potential issues, and establish baseline quality metrics.

- Data Cleansing and Transformation: Implement data cleansing procedures to address identified issues, such as missing values, inconsistencies, and duplicates. Transform data into a consistent format suitable for analysis.

- Data Validation: Implement validation rules to ensure data integrity and consistency throughout the pipeline. This may involve checks on data types, ranges, and relationships between different data fields.

- Data Monitoring and Alerting: Set up monitoring systems to track key data quality metrics in real time. Configure alerts to notify relevant personnel when quality thresholds are breached.

- Data Governance and Documentation: Establish clear data governance policies and procedures to ensure data quality is consistently maintained. Document all data quality checks and processes for auditability and traceability.

By following these steps, organizations can effectively integrate data quality checks into their big data pipelines, ensuring the reliability and trustworthiness of their data-driven insights.
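The validation step in the list above can be sketched as a function that applies type, range, and cross-field checks to each record and returns the rule violations it finds. The record layout (`order_id`, `amount`, `ordered_on`, `shipped_on`) and the limits are invented for this example.

```python
from datetime import date


def validate(record: dict) -> list:
    """Return a list of human-readable rule violations (empty if valid)."""
    errors = []

    # Type check: the identifier must be an integer.
    if not isinstance(record.get("order_id"), int):
        errors.append("order_id must be an integer")

    # Range check: the amount must be a number within an assumed limit.
    amount = record.get("amount")
    if not isinstance(amount, (int, float)) or not 0 <= amount <= 1_000_000:
        errors.append("amount must be a number between 0 and 1,000,000")

    # Relationship check: an order cannot ship before it was placed.
    ordered, shipped = record.get("ordered_on"), record.get("shipped_on")
    if isinstance(ordered, date) and isinstance(shipped, date) and shipped < ordered:
        errors.append("shipped_on must not precede ordered_on")

    return errors


good = {"order_id": 1, "amount": 25.0,
        "ordered_on": date(2024, 1, 2), "shipped_on": date(2024, 1, 5)}
bad = {"order_id": "A7", "amount": -3,
       "ordered_on": date(2024, 1, 5), "shipped_on": date(2024, 1, 2)}

print(validate(good))      # []
print(len(validate(bad)))  # 3
```

Returning all violations, rather than stopping at the first, makes it easier to feed the results into the monitoring and alerting step and to document failures for audit purposes.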

Compliance with Relevant Regulations

Navigating the complex world of big data necessitates a robust understanding and adherence to relevant regulations. Failure to comply can lead to hefty fines, reputational damage, and loss of customer trust. This section outlines key regulations, the consequences of non-compliance, and best practices for ensuring your big data operations remain legally sound. Big data’s inherent nature – encompassing vast quantities of diverse data types – often intersects with sensitive personal information, necessitating strict adherence to various regulations.

Understanding these regulations and implementing appropriate controls is crucial for any organization dealing with significant data volumes.

Key Regulatory Requirements for Big Data Handling

Several regulations significantly impact how organizations handle big data. Understanding their specific requirements is paramount. For example, the Health Insurance Portability and Accountability Act (HIPAA) in the United States governs the privacy and security of protected health information (PHI). Similarly, the Payment Card Industry Data Security Standard (PCI DSS) mandates specific security measures for organizations handling credit card information.

The General Data Protection Regulation (GDPR) in Europe sets a high bar for data protection and privacy rights for individuals within the EU. These regulations often overlap, demanding a holistic approach to compliance. Non-compliance with these regulations can lead to severe penalties, including significant fines and legal action.

Implications of Non-Compliance with Data Regulations

Non-compliance with regulations like HIPAA, PCI DSS, and GDPR carries severe consequences. Financial penalties can be substantial, reaching millions of dollars in some cases. Beyond the financial repercussions, reputational damage can be devastating, eroding customer trust and impacting business relationships. Legal battles can be protracted and expensive, diverting resources away from core business operations. Furthermore, data breaches resulting from non-compliance can expose sensitive information, leading to identity theft, financial loss for individuals, and significant legal liabilities for the organization.

For instance, a company failing to comply with GDPR could face fines up to €20 million or 4% of annual global turnover, whichever is higher.
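The "whichever is higher" rule is easy to misread, so here is the arithmetic as a short sketch. The function name and the turnover figures are made up; the result is the statutory ceiling for this tier of infringement, not a prediction of the fine a regulator would actually impose.

```python
def gdpr_fine_ceiling(annual_global_turnover_eur: int) -> float:
    """Upper limit: the greater of EUR 20 million and 4% of global turnover."""
    four_percent = annual_global_turnover_eur * 4 / 100
    return max(20_000_000, four_percent)


# Turnover of EUR 100 million: 4% is EUR 4 million, so the fixed amount applies.
print(gdpr_fine_ceiling(100_000_000))    # 20000000

# Turnover of EUR 2 billion: 4% is EUR 80 million, which exceeds EUR 20 million.
print(gdpr_fine_ceiling(2_000_000_000))  # 80000000.0
```

The crossover point is a turnover of €500 million: below it the €20 million figure is the ceiling, above it the 4% figure is.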

Best Practices for Ensuring Compliance with Data Privacy Regulations

Implementing robust data governance is central to achieving compliance. This includes establishing clear data ownership, access control policies, and data retention guidelines. Regular data audits and vulnerability assessments are essential for identifying and mitigating risks. Employee training on data privacy and security best practices is crucial. Investing in strong data encryption and anonymization techniques protects sensitive information.

Finally, implementing a comprehensive incident response plan helps minimize the impact of data breaches. Proactive monitoring and regular updates to security protocols are vital to stay ahead of evolving threats.

The Role of Data Governance in Meeting Regulatory Obligations

Data governance acts as the cornerstone of regulatory compliance. A well-defined data governance framework establishes clear roles and responsibilities for data management, ensuring accountability throughout the organization. It dictates how data is collected, stored, processed, and ultimately disposed of, aligning with regulatory requirements. By implementing robust data governance practices, organizations can streamline compliance efforts, reduce risks, and demonstrate their commitment to data protection.

A comprehensive data governance program provides a structured approach to managing the data lifecycle, thus simplifying compliance with various regulations.

Creating a Compliance Checklist for Big Data Operations

A comprehensive checklist is crucial for ensuring ongoing compliance. This checklist should cover key aspects, including data mapping to identify sensitive information, regular security assessments and penetration testing, implementation and maintenance of access control policies, data retention and disposal procedures aligned with regulations, employee training programs, and a documented incident response plan. The checklist should be reviewed and updated regularly to reflect changes in regulations and technology.

Regular audits should be conducted to verify compliance with the checklist’s provisions. This proactive approach ensures ongoing adherence to regulations and minimizes risks.

Data Retention and Disposal

Big data governance isn’t just about collecting and analyzing information; it’s also about knowing when to let go. Establishing a robust data retention and disposal strategy is crucial for compliance, security, and cost-effectiveness. Failing to do so can lead to hefty fines, reputational damage, and even legal action. This section covers best practices for managing the entire data lifecycle, from creation to destruction. Data retention policies define how long an organization keeps different types of data.

These policies are essential for minimizing risk, ensuring compliance with regulations like GDPR and HIPAA, and optimizing storage costs. A well-defined policy clarifies which data needs to be retained, for how long, and the procedures for secure archiving and deletion. Without clear guidelines, organizations risk accidental deletion of crucial information or the unintentional retention of sensitive data beyond its useful life, exposing themselves to potential vulnerabilities.

Defining Data Retention Policies

A comprehensive data retention policy should clearly outline the retention periods for various data categories, based on legal, regulatory, business, and operational requirements. For example, financial records might have a seven-year retention period due to tax regulations, while customer service interactions may only need to be kept for a year. The policy should also specify the format in which data should be stored (e.g., physical archives, cloud storage) and the procedures for data access and retrieval.

Regular reviews and updates to the policy are vital to ensure it remains relevant and compliant with evolving regulations and business needs. A clear escalation path for exceptions and disputes should also be included.
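A retention policy becomes enforceable once it is expressed as data that systems can evaluate. The sketch below encodes the two example periods from this section in a hypothetical `RETENTION_YEARS` table and computes when a record becomes due for archiving or deletion; the category names are assumptions.

```python
from datetime import date

# Retention periods in years, taken from the examples in the text above.
RETENTION_YEARS = {
    "financial_record": 7,
    "customer_service_interaction": 1,
}


def retention_expiry(category: str, created_on: date) -> date:
    """Date on which the retention period for a record ends."""
    years = RETENTION_YEARS[category]  # unknown categories fail loudly (KeyError)
    try:
        return created_on.replace(year=created_on.year + years)
    except ValueError:
        # Created on 29 February and the target year is not a leap year.
        return created_on.replace(year=created_on.year + years, day=28)


def is_due_for_disposal(category: str, created_on: date, today: date) -> bool:
    return today >= retention_expiry(category, created_on)


print(retention_expiry("financial_record", date(2020, 3, 15)))
# 2027-03-15
print(is_due_for_disposal("customer_service_interaction",
                          date(2023, 1, 1), date(2024, 6, 1)))
# True
```

Raising an error for an unclassified category is deliberate: data without a defined retention period is a policy gap that should be escalated, not silently kept or deleted.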

Secure Archiving and Data Deletion Methods

Once the retention period expires, data must be securely archived or deleted. Secure archiving involves transferring data to a separate, secure storage location, often using encryption and access controls to protect it from unauthorized access. Data deletion should employ methods that ensure data is irretrievable, such as data wiping or secure destruction of physical media. Organizations should choose methods appropriate for the sensitivity of the data and comply with relevant regulations.

For example, sensitive personal data might require specialized data sanitization techniques before disposal.

Data Lifecycle Management Strategies

Effective data lifecycle management (DLM) involves planning for the entire journey of data, from its creation to its ultimate disposal. A DLM strategy encompasses data retention policies, archiving procedures, and deletion methods, ensuring data is handled securely and efficiently throughout its lifecycle. It often includes automated processes for data classification, retention scheduling, and disposal, reducing manual effort and minimizing risks.

Regular audits and monitoring are crucial to ensure the DLM strategy remains effective and compliant. One example of a DLM strategy is using a tiered storage approach, where frequently accessed data is stored in faster, more expensive storage, while less frequently accessed data is moved to slower, cheaper storage.
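A tiered storage rule of this kind can be sketched in a few lines. The tier names and the 30-day and 365-day cut-offs are illustrative assumptions, not recommendations; real thresholds depend on access patterns and storage pricing.

```python
from datetime import date

# Assumed cut-offs, in days since the data was last accessed.
HOT_MAX_DAYS = 30
WARM_MAX_DAYS = 365


def storage_tier(last_accessed: date, today: date) -> str:
    """Pick a storage tier from how recently the data was accessed."""
    age_days = (today - last_accessed).days
    if age_days <= HOT_MAX_DAYS:
        return "hot"   # fast, more expensive storage
    if age_days <= WARM_MAX_DAYS:
        return "warm"  # slower, cheaper storage
    return "cold"      # archival storage


today = date(2024, 6, 1)
print(storage_tier(date(2024, 5, 20), today))  # hot
print(storage_tier(date(2023, 12, 1), today))  # warm
print(storage_tier(date(2021, 1, 1), today))   # cold
```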

Managing Sensitive Data Throughout its Lifecycle

Handling sensitive data, such as personally identifiable information (PII) or protected health information (PHI), requires extra care. Strict access controls, encryption both in transit and at rest, and regular security audits are essential. Data minimization – collecting and retaining only the necessary data – is a key principle. Data masking and anonymization techniques can be used to protect sensitive data while still allowing for analysis.

Regular employee training on data security and privacy is also crucial. Any breaches or incidents must be reported promptly according to relevant regulations.
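Two of the protective techniques mentioned above, masking and keyed pseudonymization, can be sketched with the Python standard library alone. The function names and the hard-coded key are for illustration only; in practice the key would come from a secrets manager. Note that pseudonymized values can still be linked back to a person by anyone holding the key, so this is weaker than true anonymization.

```python
import hashlib
import hmac


def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}{'*' * (len(local) - 1)}@{domain}"


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Keyed hash: equal inputs yield equal tokens, so joins and counts still work."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


key = b"example-key-do-not-use-in-production"  # hypothetical key

print(mask_email("jane.doe@example.com"))  # j*******@example.com

token_a = pseudonymize("patient-12345", key)
token_b = pseudonymize("patient-12345", key)
print(token_a == token_b)  # True: the same patient always maps to the same token
```

Using a keyed hash (HMAC) instead of a plain hash matters because identifiers such as patient numbers come from a small, guessable space; without the key, an attacker could hash every candidate value and reverse the mapping.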

Data Retention and Disposal Plan for the Healthcare Industry

The healthcare industry is heavily regulated, with laws like HIPAA dictating strict data retention and disposal requirements for protected health information (PHI). A healthcare data retention and disposal plan must comply with these regulations, specifying retention periods for different types of PHI, such as medical records, billing information, and patient communications. The plan should detail secure archiving and deletion methods, ensuring PHI is protected from unauthorized access or disclosure.

It must also outline procedures for handling data breaches and incident reporting. The plan should be regularly reviewed and updated to reflect changes in regulations and best practices. For example, patient medical records might be retained for a minimum of six years, or longer where state law requires it; HIPAA itself sets a six-year retention period for compliance documentation, while retention periods for the medical records themselves come mainly from state law. Disposal of physical medical records might involve secure shredding, while electronic records require secure deletion methods that prevent data recovery.

Big Data Governance and Risk Management

Effective big data governance isn’t just about compliance; it’s about proactively managing the inherent risks associated with handling massive datasets. Without a robust framework, organizations face significant challenges, impacting everything from operational efficiency to brand reputation. This section delves into the critical aspects of risk management within a big data context.

Potential Risks of Inadequate Big Data Governance

Inadequate big data governance creates a breeding ground for various risks. Data breaches, resulting in financial losses and reputational damage, are a major concern. Non-compliance with regulations like GDPR or CCPA can lead to hefty fines and legal battles. Poor data quality can result in flawed business decisions, wasted resources, and missed opportunities. Furthermore, a lack of governance can hinder collaboration and create inconsistencies across different data sets, ultimately impacting the organization’s ability to derive valuable insights.

Finally, the lack of a clear data ownership structure can lead to confusion and conflict, impeding efficient data management.

Strategies for Mitigating Big Data Risks

Mitigating these risks requires a multi-pronged approach. Implementing strong data security measures, including encryption and access controls, is paramount. Regular data audits and vulnerability assessments help identify and address weaknesses in the system. Investing in robust data quality management tools and processes ensures the accuracy and reliability of the data. Establishing clear data ownership and accountability responsibilities prevents confusion and promotes responsible data handling.

Finally, comprehensive training programs for employees on data governance policies and best practices are essential for fostering a culture of data responsibility.

Risk Assessment Methodologies for Big Data Environments

Several methodologies can be used to assess risks in big data environments. One common approach is a qualitative risk assessment, where experts evaluate the likelihood and impact of potential risks based on their experience and knowledge. Quantitative risk assessment methods, on the other hand, use statistical data and models to estimate the probability and potential financial losses associated with specific risks.

A hybrid approach, combining both qualitative and quantitative methods, often provides the most comprehensive view of the risk landscape. For example, a company might use a qualitative assessment to identify potential threats like insider data breaches, then use quantitative methods to estimate the financial impact of such a breach based on historical data and industry benchmarks.
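One widely used quantitative measure is annualized loss expectancy: the estimated cost of a single incident multiplied by how often it is expected to occur per year. The sketch below applies it to two threats; every figure is invented for illustration and does not come from real benchmarks.

```python
def annualized_loss_expectancy(single_loss_eur: float, annual_rate: float) -> float:
    """Expected yearly loss = cost of one incident x expected incidents per year."""
    return single_loss_eur * annual_rate


# Hypothetical estimates: (cost of one incident in EUR, expected incidents per year)
threats = {
    "insider data breach": (2_000_000, 0.125),  # roughly once every eight years
    "ransomware outage": (400_000, 0.25),       # roughly once every four years
}

expected_losses = {
    name: annualized_loss_expectancy(cost, rate)
    for name, (cost, rate) in threats.items()
}

for name, loss in sorted(expected_losses.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {loss:,.0f} EUR per year")
# insider data breach: 250,000 EUR per year
# ransomware outage: 100,000 EUR per year
```

A figure like this gives a rough upper bound on what is worth spending each year to mitigate a given risk, which is where the qualitative and quantitative views meet.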

The Role of Risk Management in Ensuring Compliance

Risk management plays a crucial role in ensuring compliance with relevant regulations. By identifying and mitigating potential risks, organizations can demonstrate their commitment to data protection and privacy. A well-defined risk management framework provides a roadmap for complying with legal and regulatory requirements. Regular risk assessments help organizations stay ahead of emerging threats and adapt their data governance strategies accordingly.

This proactive approach not only helps prevent non-compliance but also strengthens the organization’s overall security posture.

Risk Register for a Big Data Project

A risk register is a crucial tool for documenting and managing potential risks. Below is an example:

| Risk | Likelihood | Impact | Mitigation Strategy |
|---|---|---|---|
| Data Breach | High | High (Financial loss, reputational damage) | Implement robust security measures (encryption, access controls), regular security audits |
| Data Loss | Medium | Medium (Operational disruption, data recovery costs) | Implement data backup and recovery procedures, regular data backups to offsite locations |
| Non-Compliance with Regulations | Medium | High (Fines, legal action) | Develop and implement a comprehensive data governance policy, regular compliance audits |
| Poor Data Quality | High | Medium (Inaccurate business decisions) | Implement data quality management processes, data cleansing and validation |
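A register like the one above is often prioritized by converting the qualitative ratings to numbers and multiplying them. The sketch below does this for the four risks in the table; the 1 to 3 scale and the multiplication rule are a common convention assumed here, not something the register itself prescribes.

```python
# Assumed numeric scale for the qualitative ratings.
LEVELS = {"Low": 1, "Medium": 2, "High": 3}

# (risk, likelihood, impact) as listed in the register above.
register = [
    ("Data Breach", "High", "High"),
    ("Data Loss", "Medium", "Medium"),
    ("Non-Compliance with Regulations", "Medium", "High"),
    ("Poor Data Quality", "High", "Medium"),
]


def score(likelihood: str, impact: str) -> int:
    """Risk score = likelihood level x impact level (1 to 9)."""
    return LEVELS[likelihood] * LEVELS[impact]


# Highest score first; ties keep their original register order.
ranked = sorted(register, key=lambda r: score(r[1], r[2]), reverse=True)

for name, likelihood, impact in ranked:
    print(f"{score(likelihood, impact)}  {name}")
# 9  Data Breach
# 6  Non-Compliance with Regulations
# 6  Poor Data Quality
# 4  Data Loss
```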

Monitoring and Auditing Big Data Activities

Continuous monitoring and regular auditing are crucial for maintaining the integrity, security, and compliance of big data systems. Without these processes, organizations risk data breaches, regulatory violations, and significant financial losses. Effective monitoring and auditing provide a comprehensive overview of data usage, access patterns, and system performance, allowing for proactive identification and mitigation of potential problems.

The Importance of Continuous Monitoring of Big Data Systems

Continuous monitoring of big data systems is essential for detecting anomalies, security breaches, and performance issues in real time. This proactive approach enables organizations to respond quickly to threats and prevent significant disruptions. Real-time monitoring provides valuable insights into data access patterns, identifying unusual activity that might indicate malicious intent or accidental data leakage. By continuously tracking key performance indicators (KPIs), such as data processing speed, storage capacity utilization, and query response times, organizations can optimize system performance and ensure efficient resource allocation.

Early detection of performance bottlenecks prevents service degradation and improves overall system efficiency. This also facilitates capacity planning, allowing for proactive scaling of resources to accommodate growing data volumes and user demands. For example, a sudden spike in failed login attempts could signal a brute-force attack, which, if detected early, can be mitigated before any significant damage is done.
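The failed-login example can be sketched as a sliding-window counter per source address. The class name, the limits, and the sample IP address (taken from a range reserved for documentation) are assumptions; a real deployment would usually rely on a SIEM or the authentication system's own rate limiting.

```python
from collections import defaultdict, deque


class FailedLoginMonitor:
    """Flags a source once it exceeds a failure limit within a time window."""

    def __init__(self, max_failures: int = 5, window_seconds: float = 60):
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)  # source IP -> failure timestamps

    def record_failure(self, source_ip: str, timestamp: float) -> bool:
        """Record one failed login; return True if the source should be flagged."""
        events = self._events[source_ip]
        events.append(timestamp)
        # Discard failures that have fallen out of the sliding window.
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        return len(events) > self.max_failures


monitor = FailedLoginMonitor(max_failures=3, window_seconds=60)
alerts = [monitor.record_failure("203.0.113.7", t) for t in (0, 10, 20, 30, 200)]
print(alerts)  # [False, False, False, True, False]
```

The fourth failure inside one minute trips the alert; the attempt at second 200 does not, because the earlier failures have aged out of the window by then.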

Methods for Auditing Big Data Activities to Ensure Compliance

Auditing big data activities involves a systematic examination of data processing, storage, and access to verify compliance with internal policies and external regulations. This includes reviewing access logs, security configurations, and data governance policies to ensure data integrity and security. Audits can be performed periodically, triggered by specific events (e.g., a security incident), or as part of a broader compliance program.

The audit process typically involves defining audit objectives, selecting audit samples, gathering evidence, analyzing findings, and reporting results. Techniques like data profiling, which involves analyzing data characteristics to identify inconsistencies and potential data quality issues, play a vital role in ensuring the accuracy and reliability of data. Data lineage tracking, which maps the journey of data from its origin to its final destination, is also crucial for understanding data transformations and identifying potential vulnerabilities.

For instance, a financial institution might audit its big data systems annually to ensure compliance with regulations like GDPR and CCPA, focusing on areas like data subject access requests and data breach notification procedures.

Examples of Monitoring Tools and Techniques

Several tools and techniques facilitate effective monitoring and auditing of big data activities. These include security information and event management (SIEM) systems, which aggregate and analyze security logs from various sources to detect suspicious activity; data loss prevention (DLP) tools, which monitor data movement and prevent sensitive data from leaving the organization’s control; and big data monitoring platforms, which provide real-time visibility into the performance and health of big data systems.

Specific techniques such as anomaly detection algorithms can identify unusual patterns in data access or system behavior, signaling potential security threats. Data masking and encryption techniques help protect sensitive data while still allowing for analysis and auditing. On the tooling side, Splunk is a widely used log analytics platform that is often deployed for SIEM, providing monitoring and analysis capabilities for big data environments.

Cloudera Manager offers monitoring and management tools specifically designed for Hadoop-based big data platforms.
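One simple form of the anomaly detection mentioned above is a modified z-score based on the median absolute deviation. The sketch below applies it to per-user access counts; the function name `flag_unusual_counts` and the sample data are hypothetical, and the 3.5 cutoff is a commonly cited default rather than a value taken from any particular tool.

```python
from statistics import median


def flag_unusual_counts(counts, cutoff=3.5):
    """Return keys whose count is far from the median, using a modified z-score."""
    values = list(counts.values())
    if len(values) < 3:
        return []  # too few observations to judge what is typical
    mid = median(values)
    mad = median(abs(v - mid) for v in values)  # median absolute deviation
    if mad == 0:
        return []  # no spread to compare against; this sketch gives up here
    return sorted(
        key for key, value in counts.items()
        if 0.6745 * abs(value - mid) / mad > cutoff
    )


daily_reads = {"alice": 40, "bob": 42, "carol": 38, "dave": 41, "eve": 900}
suspects = flag_unusual_counts(daily_reads)
```

The median-based score is used here because a single extreme value distorts a mean and standard deviation much more than it distorts a median, which matters when the outlier is exactly what is being looked for.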

Best Practices for Documenting and Reporting on Audit Findings

Comprehensive documentation and reporting are crucial for demonstrating compliance and identifying areas for improvement. Audit findings should be clearly documented, including the date of the audit, the scope of the audit, the methodology used, and a detailed description of any identified issues or non-compliances. Reports should be concise, easy to understand, and tailored to the audience (e.g., management, regulatory bodies).

They should include recommendations for remediation and a plan for addressing identified issues. A well-structured report allows for easy tracking of progress and demonstrates the organization’s commitment to data governance and compliance. For example, a standardized template for audit reports can ensure consistency and facilitate comparison across different audits.
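A standardized report template could be represented as a small data structure so that every audit captures the same fields. The sketch below is one possible shape: the class names `Finding` and `AuditReport`, the severity scale, and the sample values are assumptions for illustration, while the fields themselves follow the items listed above (date, scope, methodology, audience, findings, recommendations).

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Finding:
    description: str
    severity: str              # e.g. "low", "medium", "high" (illustrative scale)
    recommendation: str
    remediation_due: str = ""  # ISO date; left empty until a plan is agreed


@dataclass
class AuditReport:
    audit_date: str
    scope: str
    methodology: str
    audience: str
    findings: list = field(default_factory=list)

    def to_json(self):
        """Serialize the report, including nested findings, for storage or comparison."""
        return json.dumps(asdict(self), indent=2)
```

Because every report serializes to the same keys, findings from different audits can be compared or tracked over time with ordinary tooling.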

Design of an Audit Trail System for Tracking Data Access and Modifications

An effective audit trail system provides a detailed record of all data access and modifications, enabling organizations to track data lineage, identify unauthorized access, and investigate security incidents. This system should record the user’s identity, the date and time of access, the type of access (e.g., read, write, delete), and the specific data accessed or modified. The system should be designed to be tamper-proof and should store audit logs in a secure and readily accessible location.

The audit trail should be regularly reviewed and analyzed to detect anomalies and ensure compliance with data governance policies. For example, a system could log every instance of data access, including the specific fields accessed, the IP address of the accessing user, and any changes made to the data. This detailed information is crucial for conducting thorough investigations and ensuring accountability.
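The fields described above can be captured in an append-only log whose entries are chained by hash, so that later edits become detectable. This is a minimal in-memory sketch under stated assumptions: the class name `AuditTrail` and its method names are hypothetical, and hash chaining makes tampering evident rather than impossible, so a real system would also need write-once storage and access controls on the log itself.

```python
import hashlib
import json


class AuditTrail:
    """Append-only log where each entry's hash also covers the previous entry's hash."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(record, prev_hash):
        # Serialize with sorted keys so the same record always hashes identically.
        payload = json.dumps(record, sort_keys=True) + prev_hash
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, user, timestamp, action, resource, source_ip):
        record = {
            "user": user,
            "timestamp": timestamp,
            "action": action,      # e.g. "read", "write", "delete"
            "resource": resource,  # the dataset or fields touched
            "source_ip": source_ip,
        }
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        self.entries.append({"record": record, "hash": self._digest(record, prev_hash)})

    def verify(self):
        """Recompute the chain; return False if any entry was altered or reordered."""
        prev_hash = ""
        for entry in self.entries:
            if entry["hash"] != self._digest(entry["record"], prev_hash):
                return False
            prev_hash = entry["hash"]
        return True
```

Running `verify()` as part of the regular review means that a modified or removed entry breaks the chain from that point onward, which narrows down where an investigation should start.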

Training and Awareness Programs

Big data governance and compliance aren’t just about policies and procedures; they’re about people. A robust training and awareness program is crucial for ensuring that employees understand their roles and responsibilities in protecting sensitive data. Without proper training, even the most comprehensive policies can be rendered ineffective. This section outlines the importance of training, provides examples of effective programs, and details best practices for fostering a culture of compliance.

Effective training programs are essential for successful big data governance. They equip employees with the knowledge and skills to handle sensitive data responsibly, reducing the risk of data breaches and non-compliance. Furthermore, well-designed training programs demonstrate a company’s commitment to data protection, fostering trust among employees and stakeholders. A culture of compliance is built on understanding, not just rules.

Effective Training Program Examples

Several approaches can be adopted for creating effective training programs. For instance, interactive online modules can deliver information efficiently and at the employee’s own pace. These modules could include quizzes and scenarios to test comprehension and application of learned concepts. Alternatively, instructor-led workshops provide opportunities for interactive learning, discussions, and immediate feedback. A blended approach, combining online modules with in-person workshops, often proves to be the most effective, catering to different learning styles and ensuring thorough knowledge absorption.

Furthermore, gamification techniques, such as incorporating challenges and rewards, can increase engagement and knowledge retention. Finally, regular refresher courses are vital to keep employees updated on evolving regulations and best practices.

Best Practices for Creating Awareness About Data Security and Privacy

Creating awareness about data security and privacy involves more than just delivering information; it necessitates a cultural shift. Regular communication is key – newsletters, internal memos, and company-wide announcements can keep data security at the forefront of employees’ minds. Real-life examples of data breaches and their consequences can serve as powerful reminders of the importance of data protection.

Additionally, incorporating data security and privacy into onboarding programs ensures that new hires understand their responsibilities from day one. Encouraging employees to report potential security risks, without fear of reprisal, is also critical. This requires establishing clear reporting channels and assuring employees that their concerns will be addressed promptly and confidentially. Finally, regular security awareness campaigns, perhaps themed around specific data protection events or awareness months, can reinforce good practices and keep the topic engaging.

The Role of Training in Fostering a Culture of Compliance

Training is not just about ticking boxes; it’s about shaping behavior. A well-designed training program can significantly contribute to fostering a culture of compliance within an organization. By emphasizing the importance of data governance and compliance, and by providing employees with the tools and knowledge they need to comply, organizations can cultivate a mindset of responsibility and accountability.

Regular training reinforces the organization’s commitment to data protection and signals to employees that compliance is a top priority. This, in turn, encourages employees to take ownership of data security and privacy, making them active participants in maintaining the organization’s compliance posture. Moreover, including real-world scenarios and case studies in training programs can help employees understand the practical implications of non-compliance, further reinforcing the importance of adhering to data governance policies.

Example Training Program Outline

This outline details a potential training program focused on big data governance and compliance.

Module 1: Introduction to Big Data Governance and Compliance

This module will cover the definition of big data governance, its importance, and the legal and regulatory landscape.

Module 2: Data Security and Privacy Principles

This module will delve into data security best practices, including access control, encryption, and data loss prevention. It will also cover relevant privacy regulations such as GDPR and CCPA.

Module 3: Data Quality and Management

This module will address data quality issues and best practices for managing big data, including data cleansing, deduplication, and data validation.

Module 4: Compliance with Relevant Regulations

This module will cover specific compliance requirements related to big data, including data retention policies and procedures for handling data breaches.

Module 5: Data Retention and Disposal

This module will detail best practices for data retention and disposal, ensuring compliance with relevant regulations and minimizing risks.

Module 6: Big Data Governance and Risk Management

This module will cover risk assessment methodologies and mitigation strategies for big data.

Module 7: Monitoring and Auditing Big Data Activities

This module will discuss the importance of monitoring and auditing big data activities to ensure compliance.

Module 8: Practical Exercises and Case Studies

This module will involve hands-on exercises and real-world case studies to solidify learning and promote practical application of concepts.